The Future of Orchestration on the Edge

Device Native Deployment, Scaling, and Management

The world of software has advanced to the point where we can deploy models continuously, train them on new data, federate the gradients back, average them into an aggregated model, validate the new model, and push it out, all in a matter of minutes.

No such orchestration chain exists in the world of TinyML.

Our Origins

When we started HOTG, we were just two hackers in a garage who wanted to push the limits of what was possible with AI outside the cloud. When the TinyML revolution showed up in Silicon Valley, we dove in headfirst!

Our team’s background is in building large-scale machine learning platforms and on-device learning for phones. We have deep expertise in training neural networks for specific hardware with privacy-preserving technologies such as federated learning. Through repeated effort, we mastered the art of reliably shipping production-grade machine learning apps.

Currently, shipping AI to constrained devices is nowhere close to the experience of deploying models in the cloud. There is no single production-grade toolchain, no easy way to target builds for multiple devices and environments, and repeatable, reliable builds are but a dream.

This realization, together with our deep roots in cloud machine learning, shaped our ideas of what the tinyVerse needs.

To understand why an orchestration layer is needed in the device world, let us look at how orchestration first emerged in the cloud native world.

A Brief History Of Cloud Native

In the beginning there was metal (🤘), bare metal. Servers were hosted and managed in data centers, and workloads ran directly on the host operating system. Until:

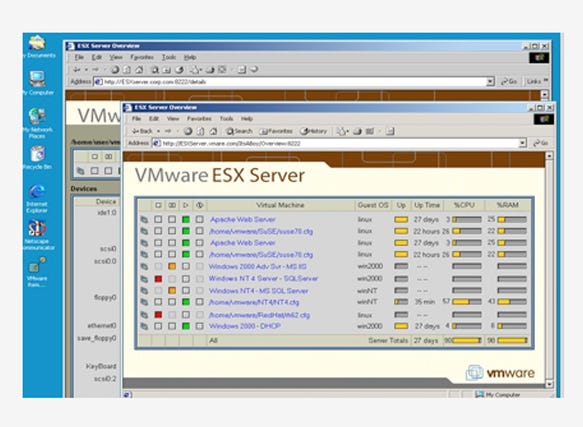

2002: VMware launches its hypervisor

VMware releases ESX Server 1.5

A single physical (bare metal) server can be virtualized, allowing multiple servers to be brought up on fewer physical machines

Virtualization allowed for building better tooling for scaling applications on the web.

2006: Amazon launches Amazon Web Services

Amazon introduces cloud computing for the masses.

Infrastructure moves to the cloud

Data centralization becomes a trend

Pay-per-use computing becomes the standard

Linux becomes the host operating system of the web

Over the following years, cloud-based SaaS companies operating at web scale (billions of users) grow rapidly. Web applications are still largely monolithic, and distributed computing remains difficult.

2008: Linux adds support for containers (LXC)

LXC enables a virtual environment with its own process and network space.

Borrowing ideas from earlier systems such as Solaris Zones and FreeBSD jails, the world’s most popular operating system now supports containers natively

2013: Docker launches its containerization technology

Docker makes working with Linux containers extremely easy. The first widely adopted commercial containerization technology is born.

Computations become more portable and repeatable

Microservices become the de facto distributed computing paradigm

However, orchestration complexity grows enormously, and soon we are in the world of microservice dependency hell

2015: Google and the open source community launch Kubernetes

To manage a world of stateless microservices, we need an orchestration engine, which Kubernetes promises to deliver.

Orchestration wrangles microservice complexity

Containerized workloads become portable across clouds for the first time

The first cloud native projects are developed; ideally, they can run in any cloud environment, including on-prem.

Once the orchestration engines were in place, we saw the democratization of many AI technologies such as:

Amazon SageMaker

Today, there are hundreds of MLOps platforms for the cloud.

Each invention listed above accelerated the growth that led to the world we live in today. We expect the same for TinyML and devices, only much sooner.

Device Native Orchestration

You may be wondering how this relates to the world of devices and TinyML.

TinyML supercharges what is possible on a device. For the first time, the software is not “static”. The power of machine learning is that we don’t have to build rule-based systems; instead, we let the rules emerge from the underlying data. This is what Andrej Karpathy calls Software 2.0. It means we can change what a device does far more dynamically, and even make it adapt to its environment based on the data it samples. This is what we call the tinyVerse.

This leads us to our first hypothesis:

With TinyML, we are in the equivalent of 2002 in cloud computing. We are running workloads (TinyML models and apps) directly on the devices. However, development in the device world is moving at an accelerated pace.

HOTG is about to launch Rune, its open source virtualization and containerization technology, in late May 2021.

By building containerization tools, we hope to bring millions of developers from diverse backgrounds to the world of embedded machine learning.

This leads us to our second hypothesis:

We will experience a Cambrian explosion of ideas that will run on phones, browsers, PCs, and embedded devices in the next few years.

These ideas will grow increasingly complex and will require sophisticated orchestration solutions, repeating the cycle we saw with Kubernetes after Docker announced its containers. We consider ourselves lucky to be at the epicenter of the birth of an entirely new industry (TinyML).

Which leads us to our third and final hypothesis:

This time will be a bit different: privacy and data security will be primary requirements in system design. Unlike the cloud environment, where data was centralized, in the device native world we expect data to be distributed, insights to be federated or collected, and privacy to be a first-class citizen.

At HOTG, our goal is to solve containerization and orchestration problems with tooling that includes:

Our product stack

Rune - Build TinyML apps in a reliable and consistent way

Hammer - Deploy and manage production TinyML apps

Anvil (future) - Target multiple devices and device types using configuration instead of code

Saga (future) - Run and scale production systems of TinyML apps with resiliency, observability, and data management

Open source paved the way in defining the cloud native ecosystem, and we are hoping to do the same with HOTG for the device native ecosystem.

We will also be building enterprise features around all our tooling to make data privacy and model security top priorities.

We are indebted to the open source community for creating great tools for shipping machine learning apps, including Docker, Kubernetes, TensorFlow, PyTorch, and more.

Subscribe & follow us to check out more upcoming posts!