Observability for the edge

The known unknown

Welcome to another brand-new Monday! We hope you had a good Thanksgiving and holiday weekend.

Machine learning models are not your “develop once and be done” type of software. Even the adage “if it ain't broke, don't fix it” might not apply to them. They need constant attention, monitoring, and observation to verify that they are behaving correctly in production.

In the case of ML models on the edge, the problem only gets worse.

What makes machine learning different?

To understand why machine learning models need to be carefully monitored and observed, we need to grok what is encoded in the model.

To grossly oversimplify: in traditional programming, your code encodes the rules and logic of the algorithms you implement. At runtime, given sufficient time and memory, your program executes on the given inputs to arrive at the desired results.

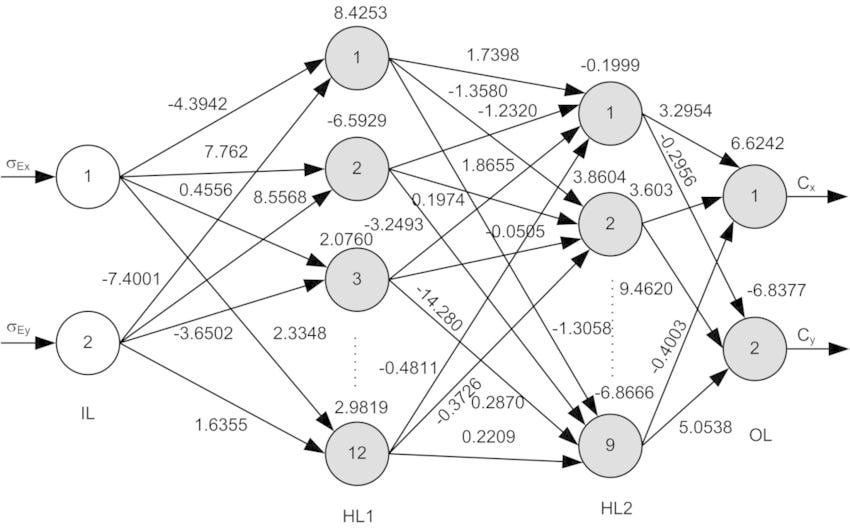

In machine learning, you have copious amounts of labeled data: the inputs and the outputs they led to. However, you don’t know what logic or rules map the inputs to the outputs. The machine learning program produces a “model” which (in an ideal world) encodes these rules. The schematic below illustrates this simple (albeit trivialized) mental model (pun intended).

Source: https://futurice.com/blog/differences-between-machine-learning-and-software-engineering
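To make the contrast concrete, here is a toy sketch in Python. The loan-approval framing, the 50,000 threshold, and the function names are hypothetical; the “training” is just a brute-force search for the single income threshold that best fits the labels, standing in for a real learning algorithm.

```python
def is_creditworthy_rule(income):
    # Traditional programming: a developer writes the rule by hand.
    # The 50,000 threshold is a hypothetical value chosen for illustration.
    return income >= 50_000


def learn_threshold(incomes, labels):
    """Toy "training": pick the income threshold that misclassifies the
    fewest labeled examples. The learned number plays the role of a model."""
    if len(incomes) != len(labels) or not incomes:
        raise ValueError("need equally sized, non-empty inputs and labels")
    best_threshold, best_errors = incomes[0], len(labels) + 1
    for candidate in sorted(set(incomes)):
        # Predict "creditworthy" when income >= candidate and count the mistakes.
        errors = sum((inc >= candidate) != lab for inc, lab in zip(incomes, labels))
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


# Labeled data: the inputs (incomes) and the outputs they led to (decisions).
incomes = [20_000, 35_000, 48_000, 52_000, 61_000, 75_000]
labels = [False, False, False, True, True, True]

threshold = learn_threshold(incomes, labels)
print(threshold)                     # 52000
print(is_creditworthy_rule(51_000))  # True under the hand-written rule
print(51_000 >= threshold)           # False under the learned rule
```

The point is where the logic lives: in the first function a human wrote it, while in the second it is a number derived from data, so it is only as good as the data it was learned from.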

A more nuanced understanding is needed to see why machine learning is so different. Andrej Karpathy has written a good post on what makes machine learning (specifically deep learning) “Software 2.0”:

In contrast, Software 2.0 is written in much more abstract, human unfriendly language, such as the weights of a neural network. No human is involved in writing this code because there are a lot of weights (typical networks might have millions), and coding directly in weights is kind of hard (I tried).

Source: https://karpathy.medium.com/software-2-0-a64152b37c35

As you can see, monitoring machine learning is different: you need to monitor whether the output program (the model) is behaving correctly, not just whether the code that trains or serves it is running correctly.

Monitoring vs Observability

We have been using technical terms such as monitor and observe. Let's define them more precisely so we can tell them apart and arrive at the crux of this post.

Monitoring:

Monitoring is about having a clear view of the operational aspects of a system. It typically answers questions such as:

Is my system working currently?

Is the performance of the system acceptable?

According to Google's Site Reliability Engineering book, monitoring is defined as:

Collecting, processing, aggregating, and displaying real-time quantitative data about a system, such as query counts and types, error counts and types, processing times, and server lifetimes.
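As a minimal sketch of the collecting and aggregating steps in that definition, the snippet below turns a batch of per-request records into query counts, error counts, and latency percentiles. The `RequestRecord` type and its fields are hypothetical; real deployments usually export such metrics to a dedicated monitoring stack rather than computing them by hand.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import quantiles
from typing import Optional


@dataclass
class RequestRecord:
    # Hypothetical per-request record collected from a model-serving process.
    query_type: str
    latency_ms: float
    error_type: Optional[str] = None  # None means the request succeeded


def summarize(records):
    """Aggregate raw request records into a few monitoring metrics."""
    if len(records) < 2:
        raise ValueError("need at least two records to estimate percentiles")
    latencies = [r.latency_ms for r in records]
    # quantiles(..., n=100) returns 99 cut points: index 49 is p50, index 94 is p95.
    # "inclusive" keeps the estimates within the observed min/max.
    cuts = quantiles(latencies, n=100, method="inclusive")
    return {
        "query_counts": dict(Counter(r.query_type for r in records)),
        "error_counts": dict(Counter(r.error_type for r in records if r.error_type)),
        "p50_latency_ms": cuts[49],
        "p95_latency_ms": cuts[94],
    }


records = [
    RequestRecord("classify", 10.0),
    RequestRecord("classify", 20.0),
    RequestRecord("classify", 30.0, error_type="timeout"),
    RequestRecord("detect", 40.0),
    RequestRecord("detect", 50.0, error_type="bad_input"),
]
print(summarize(records))
```

Note that every one of these numbers describes whether the system is up and fast; none of them says whether the model's answers are right.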

Observability:

Observability is derived information that helps you understand whether the system is behaving correctly. The “correctness” of a system is context-specific.

Observability is the ability to measure the internal states of a system by examining its outputs. A system is considered “observable” if the current state can be estimated by only using information from outputs, such as sensor data.

Observability for machine learning

Observability for AI systems is critical to continuously ensure that the system behaves correctly.

Observability (along with monitoring) becomes extremely important for ML systems. The rationale should be straightforward: ML models are themselves programs, learned from data, as opposed to results.

ML systems have a few additional nuances that make observability important, as described below.

Concept drift

A shift in the actual relationship between the model inputs and the output. An example of concept drift is when macroeconomic factors make lending riskier, and there is a higher standard to be eligible for a loan. In this case, an income level that was earlier considered creditworthy is no longer creditworthy.
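Because concept drift changes the input-to-output relationship, the inputs alone may look perfectly normal. One common signal, sketched below under simplifying assumptions, is a rise in the model's error rate on recently labeled examples compared with a baseline measured at deployment. The function names and the 0.05 tolerance are hypothetical; in practice ground-truth labels often arrive with a delay, and a proper statistical test is preferable to a fixed margin.

```python
def error_rate(predictions, labels):
    """Fraction of predictions that disagree with the ground-truth labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need equally sized, non-empty predictions and labels")
    return sum(p != y for p, y in zip(predictions, labels)) / len(labels)


def concept_drift_suspected(baseline_error, predictions, labels, tolerance=0.05):
    """Flag drift when the recent error rate exceeds the baseline error rate
    by more than `tolerance` (a hypothetical, hand-picked margin)."""
    return error_rate(predictions, labels) - baseline_error > tolerance


# Recent loan applications: the model still approves some incomes that, under
# stricter lending standards, are now labeled as not creditworthy.
recent_predictions = [True, True, True, False]
recent_labels = [False, False, True, False]
print(error_rate(recent_predictions, recent_labels))                     # 0.5
print(concept_drift_suspected(0.10, recent_predictions, recent_labels))  # True
```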

Prediction drift

A shift in the model’s predictions. For example, you may see a larger proportion of creditworthy applications when your product launches in a more affluent area. Your model still holds, but your business may be unprepared for this scenario.
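Prediction drift can be checked without any ground-truth labels, since it only looks at what the model outputs. A minimal sketch, assuming binary approve/reject predictions and reasonably large windows, is a two-proportion z-test on the approval rate before and after launch in the new area. The counts below are hypothetical.

```python
import math


def two_proportion_z(baseline_positive, baseline_total, recent_positive, recent_total):
    """Two-proportion z statistic comparing the share of positive predictions
    (e.g. approvals) in a baseline window against a recent window."""
    p_base = baseline_positive / baseline_total
    p_recent = recent_positive / recent_total
    # Pooled proportion under the null hypothesis that nothing has changed.
    pooled = (baseline_positive + recent_positive) / (baseline_total + recent_total)
    se = math.sqrt(pooled * (1 - pooled) * (1 / baseline_total + 1 / recent_total))
    if se == 0:
        return 0.0  # all-approve or all-reject in both windows: no measurable shift
    return (p_recent - p_base) / se


def prediction_drift(z, critical=1.96):
    # |z| > 1.96 corresponds to roughly a 5% two-sided significance level.
    return abs(z) > critical


# Hypothetical counts: 30% approvals at launch vs. 42% in a more affluent area.
z = two_proportion_z(300, 1000, 420, 1000)
print(round(z, 2), prediction_drift(z))
```

A flagged shift here is not necessarily a model bug; as the example above notes, the model may be right and the business simply needs to react.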

Label drift

A shift in the model’s output or label distribution.
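One way to quantify a shift in a categorical label distribution is the Population Stability Index (PSI), widely used in credit-risk practice. The sketch below is a minimal pure-Python version; the label names and counts are hypothetical, and the common rule of thumb (below 0.1 is small, 0.1 to 0.25 is moderate, above 0.25 is large) is a convention rather than a standard.

```python
import math
from collections import Counter


def distribution(values):
    """Share of each category in a list of labels."""
    counts = Counter(values)
    return {k: v / len(values) for k, v in counts.items()}


def population_stability_index(expected, actual, eps=1e-6):
    """PSI = sum over categories of (actual - expected) * ln(actual / expected).
    `eps` guards against log(0) when a category is missing from one window."""
    total = 0.0
    for category in set(expected) | set(actual):
        e = max(expected.get(category, 0.0), eps)
        a = max(actual.get(category, 0.0), eps)
        total += (a - e) * math.log(a / e)
    return total


baseline_labels = ["approved"] * 7 + ["rejected"] * 3
recent_labels = ["approved"] * 5 + ["rejected"] * 5
psi = population_stability_index(distribution(baseline_labels), distribution(recent_labels))
print(round(psi, 3))  # 0.169
```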

Feature drift

A shift in the model’s input data distribution. For example, incomes of all applicants increase by 5%, but the economic fundamentals are the same.
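For a numeric feature such as income, a standard way to compare the training-time distribution with live data is the two-sample Kolmogorov-Smirnov (KS) statistic: the largest gap between the two empirical CDFs. The sketch below implements it in pure Python; the income values are hypothetical and mirror the 5% raise in the example above.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of the two samples."""
    a, b = sorted(sample_a), sorted(sample_b)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    gap = 0.0
    # Both empirical CDFs are step functions that only jump at data points,
    # so evaluating at every pooled data point finds the maximum gap.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


# Hypothetical training-time incomes vs. the same applicants after a 5% raise.
training_incomes = [30_000 + 1_000 * i for i in range(50)]
live_incomes = [income * 1.05 for income in training_incomes]
print(ks_statistic(training_incomes, training_incomes))  # 0.0
print(ks_statistic(training_incomes, live_incomes))
```

If SciPy is available, `scipy.stats.ks_2samp` computes the same statistic along with a p-value. Note that this check only tells you the inputs moved; whether the model's decisions are still right is the concept-drift question.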

These drifts are even more pronounced for models on the edge. Edge environments are not static, so your models cannot be either. Observing ML systems therefore becomes critical to measure drift and continuously improve the model.
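Edge devices often cannot afford to buffer large windows of raw inputs for drift checks. A minimal sketch, assuming the training-time mean and standard deviation of a feature are shipped alongside the model (a hypothetical setup), keeps constant-memory running statistics with Welford's online algorithm and flags a shift in the mean using a simple z-score against the training statistics. It also assumes readings are roughly independent.

```python
import math


class RunningStats:
    """Welford's online algorithm: running mean and variance in O(1) memory."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self):
        # Sample variance; returns 0.0 by convention for fewer than two readings.
        return self._m2 / (self.n - 1) if self.n > 1 else 0.0


def mean_shift_detected(stats, train_mean, train_std, z_threshold=3.0):
    """Flag a shift when the on-device mean is more than `z_threshold`
    standard errors away from the training-time mean."""
    if stats.n < 2 or train_std <= 0:
        return False
    standard_error = train_std / math.sqrt(stats.n)
    return abs(stats.mean - train_mean) / standard_error > z_threshold


# Hypothetical statistics shipped with the model at deployment time.
TRAIN_MEAN, TRAIN_STD = 50_000.0, 10_000.0

stats = RunningStats()
for income in [52_500.0] * 400:  # live readings, 5% above the training mean
    stats.update(income)
print(stats.mean, mean_shift_detected(stats, TRAIN_MEAN, TRAIN_STD))
```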

Princeton hackathon talk

Observability is a deep topic and requires more than one post to cover in detail. Our co-founder, Kartik Thakore, recently gave a talk at Princeton's hackathon touching on this fascinating topic. You can watch the whole video, in which he goes into more detail and discusses open issues in the field of edge computing.

Observability with HOT-G

Our team has extensive experience deploying real-world ML systems in healthcare, finance, grids, and more. We believe that building the model and deploying it is only the first part of the puzzle. Truly managing a complex ML system requires tools and systems for both monitoring and observability.

Observability will be a key feature on our Hammer Forge platform sometime in 2022.

If you would like early access to Forge, just add your information below; we plan to onboard select customers in January 2022!

Follow us on our socials:

Twitter @hotg_ai and @hammer_otg | LinkedIn | Discord