RuneBERT - NLP on the Edge

Bringing NLP to embedded devices to unlock language

NLP (Natural Language Processing) is a subfield of Artificial Intelligence that focuses on developing algorithms that let computers understand speech and text. You already use it daily: when you talk to a device (“OK Google,” “Alexa”) or interact with a chatbot, you are using an application of NLP.

The world of NLP and AI had a significant breakthrough with the advent of Transformer models in 2017.

Researchers at Google and their collaborators introduced the Transformer architecture in the paper “Attention Is All You Need”.

The key ideas from the paper and the accompanying announcement are as follows:

The sequential nature of RNNs also makes it more difficult to fully take advantage of modern fast computing devices such as TPUs and GPUs, which excel at parallel and not sequential processing. Convolutional Neural Networks (CNNs) are much less sequential than RNNs, but in CNN architectures like ByteNet or ConvS2S the number of steps required to combine information from distant parts of the input still grows with increasing distance.

In contrast, the Transformer only performs a small, constant number of steps (chosen empirically). In each step, it applies a self-attention mechanism which directly models relationships between all words in a sentence, regardless of their respective position.

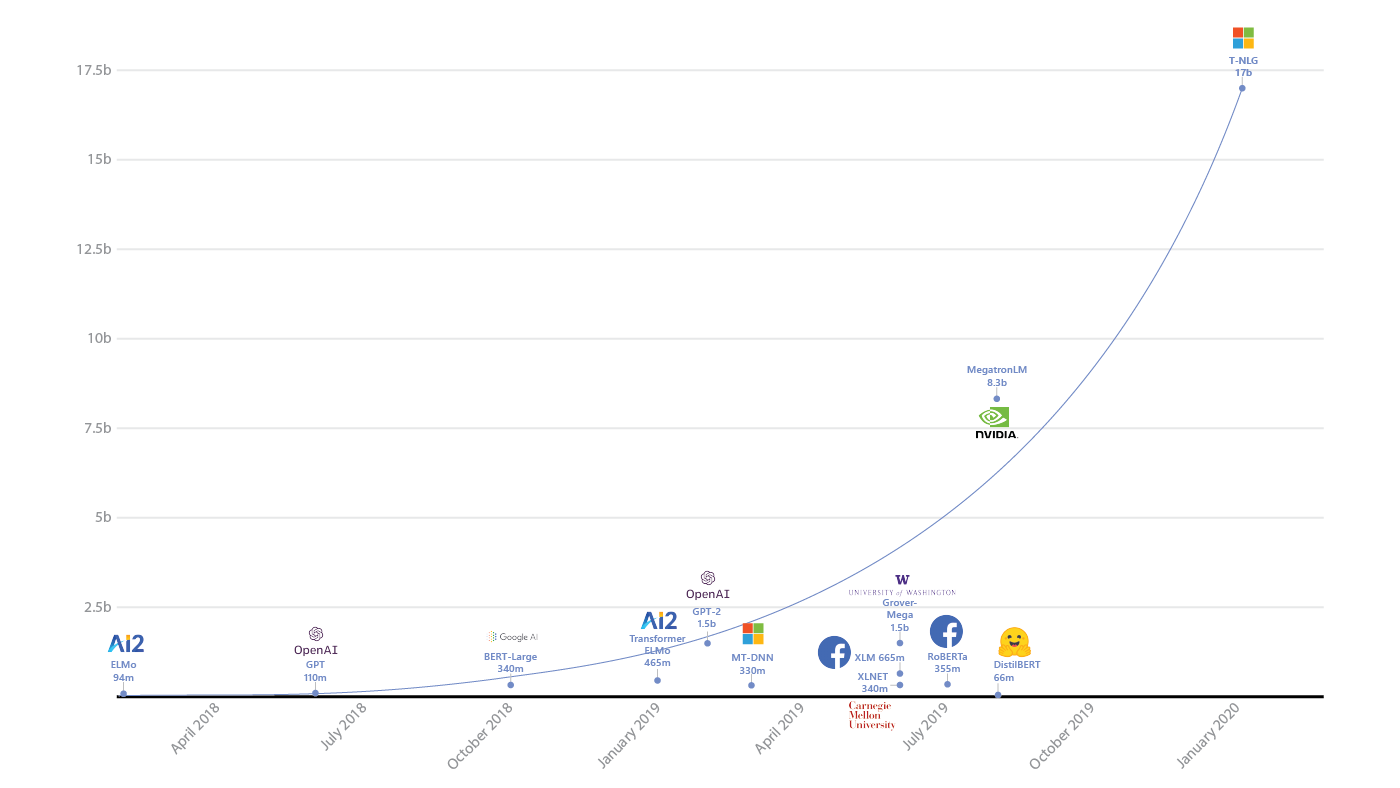
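To make the idea of self-attention a little more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is a simplification for illustration only: the toy vectors are made up, and a real Transformer derives its queries, keys, and values from learned projections and uses several attention heads in parallel.

```python
import math


def softmax(xs):
    """Turn a list of scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over one sequence of float vectors."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this token against every token in the sequence,
        # no matter how far apart the two positions are.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # The output is the weighted average of all value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights


# Toy "embeddings" for three tokens (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
```

Every row of `weights` covers all three positions, which is what lets a single step relate any pair of words directly.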

The Transformer breakthrough led to several large language models from Google, Facebook, OpenAI, and others, such as BERT (Bidirectional Encoder Representations from Transformers), RoBERTa, and GPT-3.

We are in an era of large language models with billions and even trillions of parameters.

MobileBERT

Because of their size, these large language models cannot be deployed directly on devices (edge computing). However, researchers and the community have been working on compressing them, and one result is MobileBERT. In the words of the MobileBERT paper:

“In this paper, we propose MobileBERT for compressing and accelerating the popular BERT model. Like the original BERT, MobileBERT is task-agnostic, that is, it can be generically applied to various downstream NLP tasks via simple fine-tuning.”

We believe the future of machine learning will also be tiny and suited for embedded devices. We are surrounded by devices such as watches, light bulbs, speakers, headphones, and cameras. Have you ever wondered what would happen if we made these devices smart? Embedded machine learning is all about enabling ML on these devices to make them smart.

In the rest of the post, we talk about how we made MobileBERT ready with Rune. The Rune is immediately available for anyone to use on embedded devices (phones, Coral, Raspberry Pi, and more). Please note that, due to the memory limitations of some mobile browsers, this Rune is not supported there yet.

“RuneBERT”

We have built Rune, a container for embedded machine learning applications.

We post weekly updates on our experiments with new ML models and keep adding new runes to our fleet. A few days ago, we tried Rune with NLP models. The result was beautiful: we created a BERT rune.

The BERT TFLite model is about 96MB, so it requires a capable embedded device (phones, coral.ai, Raspberry Pi, Jetson Nano, etc.).

We want to call this RuneBERT following the tradition of BERT spinoffs :)

See RuneBERT in action on a mobile phone app below:

Integration of BERT in rune

The next big question is how to integrate BERT in the rune. Natural language data must go through a pre-processing step before it is fed into the ML model and a post-processing step after it comes out.

Let’s take the example of BERT QA and understand how the data flow works. This model takes a passage and a question as input, then returns the segment of the passage that is most likely the answer to the question.

After running the rune CLI’s model-info on bertQA.tflite

    $ rune model-info bertQA.tflite

we can see the model-info:

    Inputs:
      input_ids: Int32[1, 384]
      input_mask: Int32[1, 384]
      segment_ids: Int32[1, 384]
    Outputs:
      end_logits: Float32[1, 384]
      start_logits: Float32[1, 384]

Looking at its inputs, the BERT QA model takes lists of integers, but earlier we said that the model takes a paragraph of words and a question as input.

How do we reconcile this?

This is where pre- and post-processing of the data comes in. For our devices to work with a paragraph of text, we need to break that text down into a form the machine can process. In NLP, this is the job of tokenization. The tokenizer first splits a given text into words (or parts of words, punctuation symbols, etc.), usually called tokens. The tokens are then converted to numbers through a look-up table. Finally, we build a tensor out of these numbers and feed it to the model.
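The split-then-look-up idea can be sketched in a few lines of Python. This is an illustration only, not the code of the tokenizer proc-block: the vocabulary is a tiny hand-written slice, the `[UNK]` entry and its ID are assumptions added for the demo, and a real BERT tokenizer additionally splits rare words into sub-word pieces.

```python
import re

# Tiny hand-written slice of a vocabulary, for illustration only.
# A real BERT vocabulary has tens of thousands of entries.
VOCAB = {
    "[UNK]": 100,  # assumed ID for unknown tokens
    "[CLS]": 101,
    "[SEP]": 102,
    "this": 2023,
    "is": 2003,
    "a": 1037,
    "nice": 3835,
    "sentence": 6251,
    ".": 1012,
}


def tokenize(text):
    """Lowercase the text and split it into words and punctuation marks."""
    words = re.findall(r"\w+|[^\w\s]", text.lower())
    # BERT-style inputs start with [CLS] and end with [SEP].
    return ["[CLS]"] + words + ["[SEP]"]


def to_ids(tokens):
    """Map each token to its number through the look-up table."""
    return [VOCAB.get(token, VOCAB["[UNK]"]) for token in tokens]


tokens = tokenize("This is a nice sentence.")
input_ids = to_ids(tokens)
print(tokens)
print(input_ids)
```

Any word missing from the table falls back to the `[UNK]` entry, which is why real vocabularies rely on sub-word pieces to keep unknown tokens rare.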

Example:

Original sentence: “This is a nice sentence.”

tokens: ['[CLS]', 'this', 'is', 'a', 'nice', 'sentence', '.', '[SEP]']

input_ids: [101, 2023, 2003, 1037, 3835, 6251, 1012, 102]

To make this all possible in the rune, we created three new proc-blocks:

Tokenizers

Argmax

Text_extractor

The Tokenizers proc-block takes a passage and a question as input and produces input_ids, input_mask, and segment_ids, which are then fed to the model. The model returns start_logits and end_logits. These contain one value for every token in the input, indicating how good that token would be as the start or the end of the answer to the given question. The answer runs from the index with the highest start logit to the index with the highest end logit.

The next step is therefore to find the index of the maximum value in each of the two logit arrays. For this, we use our Argmax proc-block, which scans the whole array and returns the index of its largest value. Finally, the Text_extractor proc-block converts the selected token span back into text, which serves as the answer to the question.
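The data flow described above can be sketched in plain Python. This is not the proc-block implementation: the function names are hypothetical, the question-then-passage layout and the padding ID follow the usual BERT convention and are assumptions here, and the logits are made up so the example runs without a model.

```python
MAX_LEN = 384  # sequence length reported by model-info for bertQA.tflite


def pack_inputs(question_ids, passage_ids, cls_id=101, sep_id=102, pad_id=0):
    """Build the three fixed-length model inputs from token IDs.

    Assumed layout: [CLS] question [SEP] passage [SEP], then padding.
    """
    ids = [cls_id] + question_ids + [sep_id] + passage_ids + [sep_id]
    if len(ids) > MAX_LEN:
        raise ValueError("question + passage do not fit in MAX_LEN tokens")
    # Segment 0 marks the question part, segment 1 the passage part.
    segment_ids = [0] * (len(question_ids) + 2) + [1] * (len(passage_ids) + 1)
    # The mask is 1 for real tokens and 0 for padding.
    input_mask = [1] * len(ids)
    padding = MAX_LEN - len(ids)
    return (ids + [pad_id] * padding,
            input_mask + [0] * padding,
            segment_ids + [0] * padding)


def argmax(values):
    """Return the index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def extract_answer(tokens, start_logits, end_logits):
    """Join the tokens between the best start and best end index."""
    start = argmax(start_logits)
    end = argmax(end_logits)
    if end < start:
        return ""  # no valid span
    return " ".join(tokens[start:end + 1])


tokens = ["[CLS]", "what", "is", "rune", "?", "[SEP]",
          "rune", "is", "a", "container", ".", "[SEP]"]
# Made-up logits: a real model returns 384 values per output.
start_logits = [0.0] * len(tokens)
end_logits = [0.0] * len(tokens)
start_logits[8] = 5.0  # "a"
end_logits[9] = 4.0    # "container"

answer = extract_answer(tokens, start_logits, end_logits)
print(answer)
```

A production pipeline would also merge sub-word pieces and map the span back to the original passage text. Joining tokens with spaces is only a stand-in for that step.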

For the technically astute readers: we wrote a pre- and post-processing pipeline that runs entirely on the edge!

HOT-G is building the edge-MLOps framework, and it is all open source!

How can I use it?

You can follow these instructions to replicate our results on your device. We have also updated bert.rune on our Runic mobile app. We demoed it on this passage in the video above.

This changes everything!

We think this is a critical achievement for our team and HOT-G’s roadmap!

The successful launch of RuneBERT has opened the door for us in the field of NLP. Now you can create runes for:

Speech Recognition (speech to text)

Machine Translation (text conversion of one language to another language)

Text to Speech

Text Classification, and several other applications

Bringing edge-first, private vision, sound, and now language capabilities to devices will be a massive game changer. To highlight a few examples:

Adding speech synthesis for language to toys - Tiny thinking toys! They don’t have to be internet connected and will keep the data private.

Text synthesis in both interactive and non-interactive modes. Apps that help you autocomplete on the edge - privacy-first editors.

If you are interested in commercial application of RuneBERT for your product and need HOT-G’s support (and all the other goodies we are launching end of the year to support your edge computing models), then drop a note to akshay@hotg.ai!

Resources

MobileBERT model from TF Hub - https://tfhub.dev/tensorflow/lite-model/mobilebert/1/default/1

Rune repository for BERT - https://github.com/hotg-ai/test-runes/tree/master/nlp/bert

Specific Rune file that you can download and use on Runic app - https://github.com/hotg-ai/test-runes/blob/master/nlp/bert/bert.rune

Rune CLI - https://github.com/hotg-ai/rune/releases

Follow us on our socials:

Twitter @hotg_ai and @hammer_otg | LinkedIn | Discord | Discourse