To the Edge and Beyond

My bets for EdgeML in 202X

In 2017, I was looking for a way to solve privacy issues in healthcare AI. At doc.ai, my colleagues and I had put a lot of effort into figuring out how to build computer vision models for medical research. This involved collecting highly sensitive images for training and inference, and the privacy and regulatory issues were a nightmare. To avoid having to anonymize datasets, we built the first on-device ML system with federated learning (we had to hack TensorFlow directly to make it work). At that point, I realized that the future of ML would be on smaller and smaller devices, which led me to TinyML and TensorFlow Micro.

Throughout my career, I have always been the “hack-anything-with-glue-or-bolts” guy. My approach to most hacks has been to find the quickest path from a rough idea to a working Rube Goldberg machine. I am driven to get something working “end to end”. “Hack first, understand later” has always been my ethos. So it came as a surprise to me that this was so difficult to do in TinyML and EdgeML.

I admit it: I am spoiled, coming from a background of device-agnostic development with a plethora of amazing developer tools. The openness of the web and the cloud has created a massive playground where developers can build almost anything.

Interestingly, I started my development journey while studying for my MCATs as a Biology major at the University of Western Ontario. Much to the dismay of my parents, I fell in love with writing code. I was addicted to the feeling of telling a computer to do something and seeing it happen. The developer feedback loop was my first dopamine hit, and I have never looked back.

Like many of my colleagues, I started out working on games, soon fell deeply in love with the Perl community, and kick-started the open-source PerlGameDev community. The Perl community instilled in me the core principle of Tim Toady (TIMTOWTDI), which stands for “There is more than one way to do it.” Larry Wall designed Perl to be a language where developers were free to do almost anything in any way they wanted. I strongly believe in that freedom of expression in computing and, even more importantly, in the freedom to compute.

Fourteen years later, I have built products and companies, and hacked on everything from DevOps, frontend development, and federated learning to large mission-critical ML pipelines serving 140 million users and small hacks during the pandemic. Across all of these projects and products, I have never been as excited as I am about the coming year in edge computing and EdgeML.

I started playing with the edge seriously in 2018, tinkering in my backyard shed with Arduino devices, tiny FPGAs, and piezoelectric ceramic sensors, trying to shove ML models into places they shouldn’t go.

The prototype below was my first attempt at making an ML system that could program itself using signal data and containers stored on a local device. Using the Arduino Nano 33 BLE, I was able to reprogram a TinyFPGA BX to run various models deployed purely as WebAssembly containers. The implications of an entire ML deployment pipeline running across different chipsets opened my eyes to the future.

Needless to say, the development process was pure hell. The precursor to Rune (our open-source containerization tech) was a set of scripts and tools I developed just to make deployment possible. Until I had containers working, the feedback loop between building a model and seeing it run on the device was slow, and the dopamine hit was lackluster. In the process of improving the development workflow for my hacks, I built HOTG’s technology.

I dreamed of a world where I could use declarative languages to make my ML application run on any computing device without constraints. We are well on our way to realizing that dream, and 2022 will accelerate it in significant ways.

The future of Tiny/EdgeML will be accelerated by advances in ML compilers, which will create problems of their own.

The number of AI-enabled devices is exploding, and so is the variety of architectures, and especially of software, powering them. Each class of device has nuances that require deep expertise to build complete applications. From Core ML to hls4ml, the surface area of knowledge an application developer needs has exploded. The success of your application is now tied to the hardware it runs on.

Deploying to production in the cloud is fairly simple: it is a matter of beefing up your infrastructure and tweaking your Terraform scripts. Deploying to production on the edge is a complex, iterative process of selecting the best model, quantization scheme, processing unit, and sensors. It gets even more complex once product teams start building mission-critical systems.
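Quantization is one of those knobs, and it shows why the process is iterative: every bit of precision you drop makes the model smaller and faster but less exact. As a minimal sketch (plain Python, not HOTG or TensorFlow tooling; `quantize_int8` and `dequantize` are hypothetical helper names and the weights are made up), here is symmetric per-tensor int8 quantization of a few weights, with the round-trip error measured:

```python
def quantize_int8(weights):
    """Hypothetical helper: symmetric per-tensor int8 quantization.

    Each weight w is approximated as scale * q, with q an integer in [-127, 127].
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Hypothetical helper: map int8 values back to approximate floats."""
    return [v * scale for v in q]


weights = [0.42, -1.30, 0.07, 0.99, -0.55]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Worst-case error introduced by the float -> int8 -> float round trip.
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"scale={scale:.5f} max_error={max_err:.5f}")
```

Storing int8 values instead of float32 cuts weight storage by 4x, and the rounding error per weight is at most half a quantization step (`scale / 2`). Whether that is acceptable depends on the model and the task, which is why each candidate configuration ends up being re-evaluated on the target device.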

A big part of the coming innovation centers on ML compilers from a plethora of companies and open-source initiatives. This hotbed of competition is poised to change how ML models are optimized for various devices. However, it still doesn’t solve the problem for the application developer: the joyful, tight iterative loop from idea to production, with users using your EdgeML application, still doesn’t exist in this ecosystem. ML compilers are a fundamental problem that needs to be solved, and 2022 is the year for it! Even so, many more challenges must be solved before EdgeML reaches the same stage as CloudML.

I am confident that the fast-growing EdgeML space will solve many of these technical issues. The space is well funded, and I expect that funding to grow.

On a side note, I am particularly excited about Mythic AI, which is working on analog matrix processors to take on the king of deep learning, the GPU. This is an extremely innovative space, and the software stacks that follow these chips will be just as diverse and innovative.

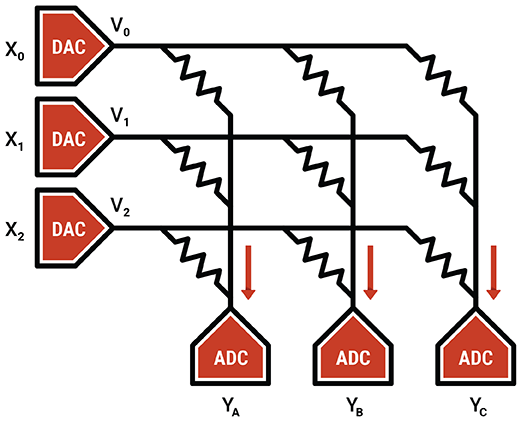

Analog computing provides the ultimate compute-in-memory processing element. The term compute-in-memory is used very broadly and can mean many things. Our analog compute takes compute-in-memory to an extreme, where we compute directly inside the memory array itself. This is possible by using the memory elements as tunable resistors, supplying the inputs as voltages, and collecting the outputs as currents. We use analog computing for our core neural network matrix operations, where we are multiplying an input vector by a weight matrix.

https://www.mythic-ai.com/technology/
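The quoted passage describes an analog matrix-vector multiply. By Ohm's law, a memory cell with conductance G[i][j] passes a current V[i] * G[i][j] when voltage V[i] is applied to its row, and Kirchhoff's current law sums those currents on each shared column wire, so column j carries I[j] = sum over i of V[i] * G[i][j]. The following is an idealized simulation of that idea in plain Python: it ignores noise, wire resistance, and the DAC/ADC conversions a real chip needs, the values are made up for illustration, and it is not Mythic's implementation.

```python
def crossbar_currents(voltages, conductances):
    """Idealized analog crossbar: I_j = sum_i V_i * G_ij.

    voltages: input vector in volts, one value per row line.
    conductances: matrix G[i][j] in siemens; the tunable memory cells
    that encode the weights.
    Returns the current in amperes collected on each column line.
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            # Ohm's law: the cell contributes I = V * G to its column;
            # Kirchhoff's current law sums contributions on the shared wire.
            currents[j] += v * g
    return currents


V = [0.2, 0.5, 0.1]          # input activations encoded as voltages
G = [[1e-6, 2e-6],           # weights encoded as conductances (microsiemens)
     [3e-6, 0.5e-6],
     [2e-6, 4e-6]]
I = crossbar_currents(V, G)
print([f"{i * 1e6:.2f} uA" for i in I])
```

Each column current is one entry of the matrix-vector product, produced in a single analog step rather than by a sequence of digital multiply-accumulates. Conductances cannot be negative, so signed weights need extra handling that this sketch leaves out.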

The revolution/evolution in hardware, computing, and compilers will lead to a very natural conclusion.

Fragmentation is here to stay. Fortunately, we already know how to solve this problem.

For the foreseeable future, fragmentation will be the norm in the EdgeML landscape. As reported by @ChipPro in her recent blog post, both the device backends and ML frontends are rapidly expanding.

EdgeML fragmentation is very similar to the early mobile development landscape, when the market was split between iOS and Android. In the last five years alone, tools and frameworks such as React Native have largely solved that problem. I anticipate the same happening with projects like ONNX and Apache TVM. In preparation for such frameworks, HOTG is planning a stack-agnostic solution to provide a best-in-class experience for product teams. I strongly believe that at some point there will be more edge computing products than cloud or internet-connected devices. In such a world, product teams will need a way to rapidly iterate on, collaborate on, secure, and deploy EdgeML applications.

Beyond 2022 to 202X: Product teams building applications on top of Edge/TinyML in a multi-compute environment

At HOTG.AI, we are focused on what comes next for the edge: frameworks and tools that allow teams to deploy production ML applications and products. With our enterprise offering, Hammer Forge, we plan to release tools that let developers and product managers collaborate on building amazing EdgeML products, from designing ML frameworks to rapid prototyping to deploying with security and telemetry baked in.

If you want to learn more about Hammer Forge, join the waitlist below!

Want to geek out with me on all things EdgeML? Let’s connect at kartik (at) hotg.ai or on Twitter at @KartikThakore.

HOT-G Socials:

Twitter @hotg_ai and @hammer_otg | LinkedIn | Discord