Introducing EdgeSecOps - Securing Models and Workloads on the Edge

Securing the most valuable IP - models on the edge

Our team have some news to share! We’ve launched our new website: https://hotg.ai/!

We can take a short intermission here for your browsing pleasure! What do you think? :) Don’t forget to add yourself to the waitlist if you want to be notified when our SaaS platform is live (it is almost here!). Now, onto this week’s discussion!

Security out of the box for Edge Computing

In the previous post, our co-founder talked about the foundations of our edge computing platform.

In that post, we argued that security is a critical foundational component that our platform should offer. To recap, we shared that:

Many enterprises collect unique data that can be used to train models, which then become intellectual property (IP).

The cost of developing a production version of a *good* AI model can easily reach millions of dollars. These models are therefore very valuable assets that must be well protected. In centralized server solutions, models are well hidden behind firewalls, and the outside world has only API access to them.

In contrast, deploying these models to edge devices opens them to several new types of attack, such as:

Direct model theft: The attacker steals the exact weights of the model and uses them for their own benefit.

Indirect model theft: The attacker repeatedly queries the model to automatically generate a labelled dataset. They can then train their own model to replicate the behavior of the original without spending much effort labeling a dataset themselves.

GAN-based attacks that reverse-engineer data from the model

Many other attacks on machine learning models, including attacks that identify personally identifiable information (PII)

In this post, we want to lay the foundation for thinking about these security issues at the process, platform, and cultural levels when working on edge computing. We will briefly touch on the solutions and research our team is building, and introduce the concept of DevSecOps along with our take on it for edge computing, which we call EdgeSecOps.

EdgeSecOps

DevSecOps is becoming well known in the world of cloud computing. Red Hat describes it as follows:

DevSecOps stands for development, security, and operations. It's an approach to culture, automation, and platform design that integrates security as a shared responsibility throughout the entire IT lifecycle.

In short, it brings a security mindset into the culture of DevOps.

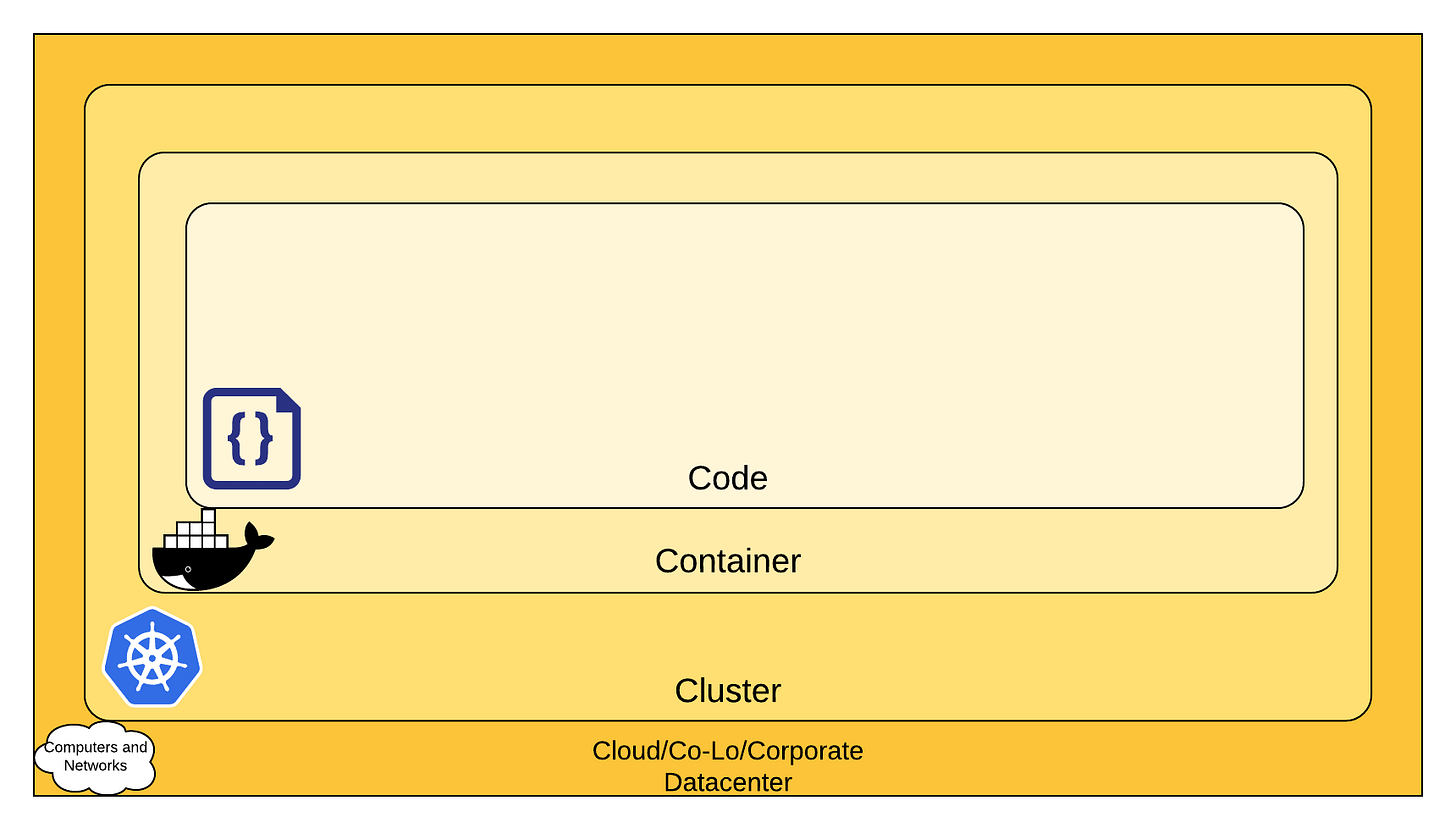

An excerpt from the excellent DevSecOps book describes cloud native security in terms of the 4Cs:

Code security

Container security

Cluster security

Cloud security

ref: Kubernetes 4Cs

If we extend this to edge computing (henceforth christened EdgeSecOps), the equivalent, from our point of view, would be the following:

Note: This is a concept architecture and not the actual implementation

The primary concern of EdgeSecOps is securing the computation on the device. It is less concerned with physical device security and device monitoring; these are critical in their own right, and some of them are being addressed at the firmware level.

At HOT-G, EdgeSecOps is a key area of focus: we are building techniques to help protect against attacks under specific threat models. The key point is that the threat model for each use case drives the solution.

To summarize: EdgeSecOps is the process, culture, and tools for securing models and workloads on the edge device.

HOT-G’s research and solutions in EdgeSecOps

We are building several edge native and cloud native solutions for the EdgeSecOps space. We are rolling some of these features into our enterprise offering; you can find more details here: https://hotg.ai/pricing/. Several of the items below are still in an early exploratory phase at HOT-G.

Watermarking and registering models: Watermarking allows companies to check whether other companies are using their exact models. Together with model registration, it can help companies prove that their models were stolen, which may significantly help in legal proceedings.
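To make registration and the exact-copy check more concrete, here is a minimal Python sketch; it is an illustration under stated assumptions, not HOT-G's implementation. It assumes a model's weights can be exported as a dictionary of named float lists and fingerprints them with SHA-256, so an exact copy can be matched against a registry. For the watermark it swaps in trigger-set (backdoor-based) watermarking, one common approach in the literature: the owner trains the model to return chosen labels on a few secret inputs, and a suspect model that reproduces those labels at a high rate is likely a copy. `ModelRegistry`, `fingerprint_weights`, and `watermark_match_rate` are hypothetical names.

```python
import hashlib
import json
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Assumption (hypothetical format): weights exported as {layer_name: [float, ...]}.
Weights = Dict[str, List[float]]


def fingerprint_weights(weights: Weights) -> str:
    """SHA-256 over a canonical JSON encoding, so key order does not matter."""
    canonical = json.dumps(weights, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ModelRegistry:
    """Hypothetical in-memory registry mapping weight fingerprints to owners."""

    def __init__(self) -> None:
        self._owners: Dict[str, str] = {}

    def register(self, owner: str, weights: Weights) -> str:
        fp = fingerprint_weights(weights)
        self._owners[fp] = owner
        return fp

    def owner_of(self, weights: Weights) -> Optional[str]:
        # Matches only bit-identical weights, i.e. an exact copy of the model.
        return self._owners.get(fingerprint_weights(weights))


def watermark_match_rate(
    predict: Callable[[Tuple[float, ...]], int],
    trigger_set: Sequence[Tuple[Tuple[float, ...], int]],
) -> float:
    """Fraction of secret trigger inputs on which the suspect model returns
    the owner's chosen (deliberately unusual) label."""
    if not trigger_set:
        raise ValueError("trigger_set must not be empty")
    hits = sum(1 for x, label in trigger_set if predict(x) == label)
    return hits / len(trigger_set)


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("acme", {"dense": [0.1, -0.2], "bias": [0.05]})
    # Same weights, different key order: still recognized as acme's model.
    print(registry.owner_of({"bias": [0.05], "dense": [0.1, -0.2]}))  # acme

    triggers = [((9.0, 9.0), 7), ((8.0, 1.0), 7)]
    suspect = lambda x: 7  # stand-in for a stolen model that learned the triggers
    print(watermark_match_rate(suspect, triggers))  # 1.0
```

A fingerprint only matches bit-identical weights; fine-tuning or re-quantizing a stolen model changes it, which is why a behavioral watermark such as a trigger set is a useful complement to registration.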

Cryptographic and obfuscation techniques to protect IP: These techniques make it much more difficult to steal the model. Ideally, they make reverse engineering (RE) of a model not worth the effort: the cost of RE approaches the cost of building the model from scratch, including collecting the data.

Zero Trust: Zero Trust is quickly emerging as a “perimeterless security” model, meant to defend against attacks that originate inside the traditional security perimeter (typically in the cloud or on-prem). With Zero Trust, we can assign identities to workloads (for example, SPIFFE in the cloud). Once workloads carry cryptographically signed identities, anything running on a “machine” has been verified by a trust system. We can then extend this model from identities to agent-based policies that control fine-grained permissions (for example, OPA in the cloud). Under certain threat models, Zero Trust provides an acceptable level of security. We are evaluating how to bring these concepts to edge devices, and much of this is now tractable because container-based technology is available for the edge.
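As a rough illustration of the identities-plus-policies idea, here is a minimal Python sketch of a deny-by-default authorization check keyed on SPIFFE IDs (URIs of the form `spiffe://<trust-domain>/<path>`). It assumes the workload's identity document has already been cryptographically verified upstream (for example by SPIRE, the SPIFFE reference implementation), and it stands in for what a policy engine such as OPA would express in its own policy language; it is not OPA's API. The trust domain `example.org`, the workload paths, and action names such as `model:infer` are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple
from urllib.parse import urlsplit


@dataclass(frozen=True)
class WorkloadIdentity:
    trust_domain: str
    path: str


def parse_spiffe_id(uri: str) -> Optional[WorkloadIdentity]:
    """Parse spiffe://<trust-domain>/<path>; return None if malformed.
    Assumes the ID was already taken from a verified identity document."""
    parts = urlsplit(uri)
    if parts.scheme != "spiffe" or not parts.netloc or parts.path in ("", "/"):
        return None
    # SPIFFE IDs carry no port, user info, query, or fragment.
    if any(c in parts.netloc for c in ":@") or parts.query or parts.fragment:
        return None
    return WorkloadIdentity(parts.netloc, parts.path)


# Hypothetical policy: explicit (workload path, action) grants; all else is denied.
Policy = FrozenSet[Tuple[str, str]]


def is_allowed(spiffe_id: str, action: str, trust_domain: str, policy: Policy) -> bool:
    identity = parse_spiffe_id(spiffe_id)
    if identity is None or identity.trust_domain != trust_domain:
        return False  # deny: malformed ID or identity from a foreign trust domain
    return (identity.path, action) in policy


if __name__ == "__main__":
    policy: Policy = frozenset({("/edge/camera-01", "model:infer")})
    print(is_allowed("spiffe://example.org/edge/camera-01", "model:infer", "example.org", policy))   # True
    print(is_allowed("spiffe://example.org/edge/camera-01", "model:export", "example.org", policy))  # False
```

Denying by default keeps the failure mode safe: a workload that is unknown, misconfigured, or from another trust domain gets no permissions rather than all of them.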

Observability: We have talked about observability before. Observability refers to monitoring the ML model as it makes predictions. For security specifically, it lets us detect anomalous input patterns that might indicate an attack on the system. An abnormally large number of requests, for example, might come from an attacker who is automatically labeling their own dataset to train their own model. We are also interested in monitoring the input data distribution to detect inputs that deviate from typical ones. We could then, for example, detect adversarial attacks in which the attacker uses specially crafted inputs to fool the system.
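The two signals described above, request volume and input distribution, can be sketched with simple statistics. This minimal Python sketch assumes non-decreasing request timestamps in seconds and one rate monitor per client, and it reduces each input to a single scalar feature compared against reference data with a z-score. The class names, thresholds, and window size are hypothetical. A z-score check only catches inputs far outside the typical range; carefully crafted adversarial examples can stay within that range, so this is a starting point rather than a defense on its own.

```python
import statistics
from collections import deque
from typing import Deque, Sequence


class RequestRateMonitor:
    """Flags a client whose request count within a sliding time window
    exceeds max_requests (e.g. automated querying to label a dataset)."""

    def __init__(self, window_seconds: float, max_requests: int) -> None:
        self.window_seconds = window_seconds
        self.max_requests = max_requests
        self._timestamps: Deque[float] = deque()

    def record(self, timestamp: float) -> bool:
        """Record one request; return True if the current window is anomalous.
        Assumes timestamps arrive in non-decreasing order."""
        self._timestamps.append(timestamp)
        # Evict requests older than the window (the newest one always stays).
        while timestamp - self._timestamps[0] > self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_requests


class InputDistributionMonitor:
    """Flags a scalar input feature whose z-score against reference data
    exceeds z_threshold."""

    def __init__(self, reference: Sequence[float], z_threshold: float = 4.0) -> None:
        # statistics.stdev needs at least two reference values.
        self.mean = statistics.mean(reference)
        self.std = statistics.stdev(reference)
        self.z_threshold = z_threshold

    def is_outlier(self, value: float) -> bool:
        if self.std == 0:
            return value != self.mean  # constant reference: any change is unusual
        return abs(value - self.mean) / self.std > self.z_threshold


if __name__ == "__main__":
    rate = RequestRateMonitor(window_seconds=1.0, max_requests=3)
    print([rate.record(t) for t in (0.0, 0.1, 0.2, 0.3)])  # [False, False, False, True]

    drift = InputDistributionMonitor([1.0, 2.0, 3.0, 4.0, 5.0])
    print(drift.is_outlier(3.5), drift.is_outlier(20.0))  # False True
```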

EdgeSecOps is an exciting field that we are working to establish and drive, bringing the security mindset needed when deploying AI to the edge.

If you are a security researcher or AI researcher in this space and want to collaborate with us, reach out to us at akshay AT hotg.ai.

If you love our content, consider supporting our team by subscribing and sharing TinyVerse! We cannot wait for you to see what we have in the pipeline this year!

HOT-G Socials:

Twitter @hotg_ai and @hammer_otg | LinkedIn | Discord