In an earlier post, “Rune-ifying Audio Models”, we briefly showed an example of a mobile application that uses a rune.

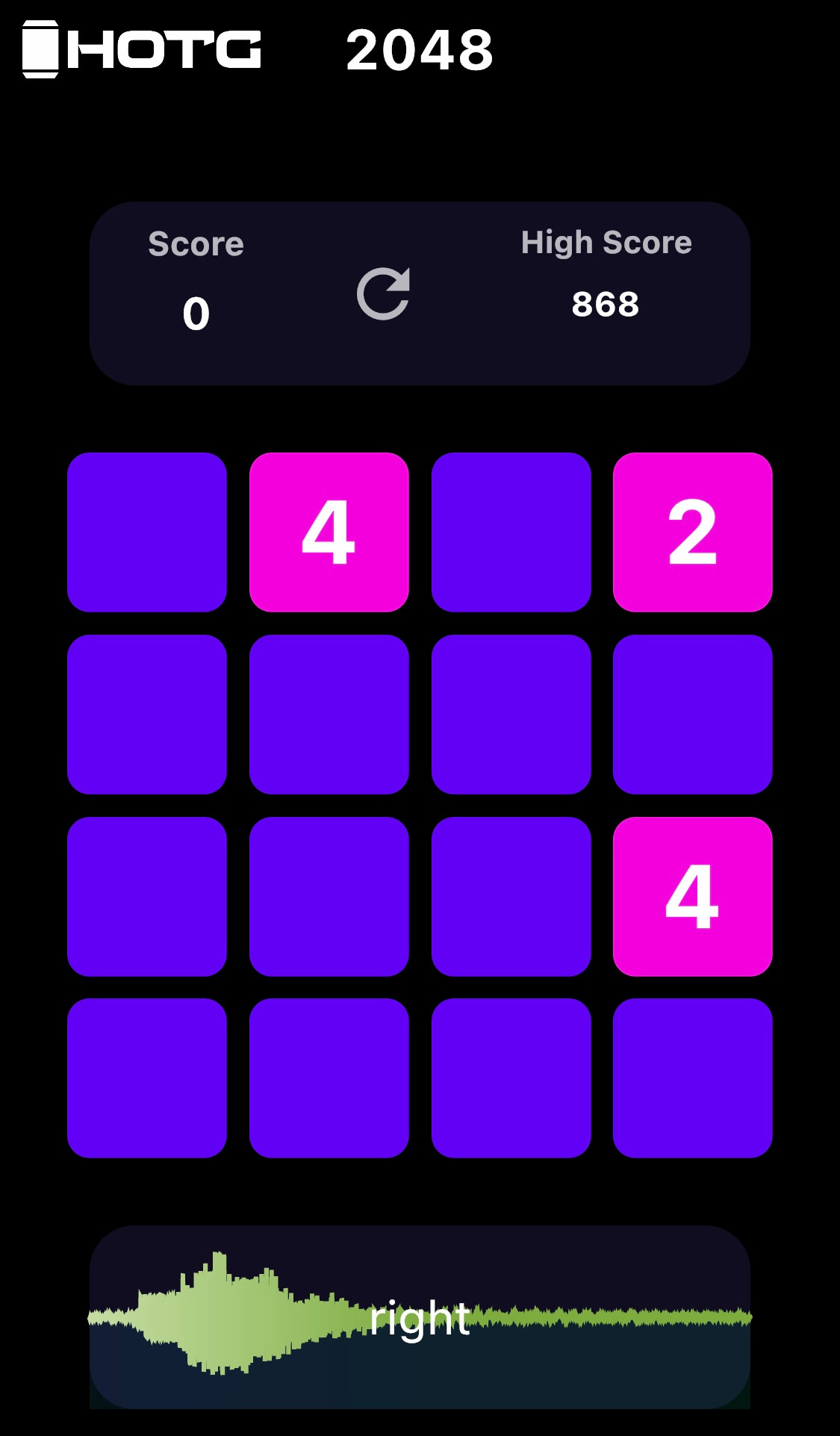

We modified 2048, a sliding-tile puzzle game, to use the microspeech rune. The rune runs a sound classification model, so the player moves the tiles by voice instead of with swipe controls.

In this tutorial, we take a closer look at how to upgrade an application with runes, using 2048 as the example. The game is built with Flutter, an SDK for cross-platform development: a single codebase can be deployed to a variety of devices.

“Hey Siri”

Any developer can now use our open-source Rune container with the microspeech model to add voice-based AI gestures to their app. By following along with this tutorial, you can go beyond “Hey Siri” or “Hey Spotify” style wake-word applications for your audience.

Installation

First, install Flutter on your operating system.

Once the installation is complete, create a Flutter project.

```shell
$ flutter create example
```

Audio Streaming and Rune

Our first step is to record an audio stream from the device's microphone. Create a lib/runic/audio.dart file to hold the audio streaming and rune code.

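```dart
import 'package:mic_stream/mic_stream.dart';

// Opens a 16 kHz, mono, 16-bit PCM stream from the device microphone.
// `stream` is a field of the enclosing class, declared elsewhere.
Future<void> initRecording() async {
  stream = await MicStream.microphone(
      sampleRate: 16000,
      channelConfig: ChannelConfig.CHANNEL_IN_MONO,
      audioFormat: AudioFormat.ENCODING_PCM_16BIT);
}
```

Uses the mic_stream package to record an input stream.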
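The stream delivers the recording as raw bytes. To measure loudness or resample the audio, it helps to view those bytes as signed 16-bit samples. The helper below is a minimal sketch, not code from our repository. `pcm16BytesToSamples` is a hypothetical name, and the sketch assumes the bytes are little-endian mono PCM. That byte order is an assumption to check on your target devices.

```dart
import 'dart:typed_data';

/// Hypothetical helper: converts raw 16-bit PCM bytes into signed samples.
/// Assumes little-endian, mono audio; a trailing odd byte is ignored.
Int16List pcm16BytesToSamples(Uint8List bytes) {
  final sampleCount = bytes.length ~/ 2; // two bytes per sample
  final data = ByteData.sublistView(bytes);
  final samples = Int16List(sampleCount);
  for (var i = 0; i < sampleCount; i++) {
    samples[i] = data.getInt16(i * 2, Endian.little);
  }
  return samples;
}
```

For example, the bytes `[0x01, 0x00, 0xFF, 0xFF]` decode to the samples `1` and `-1`.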

Devices do not all record at the same sample rate, and some may not honor the requested 16000 Hz. We therefore also need to convert the recorded audio to 16000 Hz, the input rate our model requires. The code for this step is long; it can be viewed in our GitHub repository.
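We don't reproduce the repository's conversion code here. To illustrate the idea, the sketch below shows a minimal resampler that uses linear interpolation, which is not necessarily the technique our repository uses. `resampleLinear` is a hypothetical name. The sketch applies no anti-aliasing filter, so downsampling from high rates can alias high frequencies.

```dart
import 'dart:typed_data';

/// Hypothetical sketch: resamples mono PCM16 audio from [fromRate] Hz to
/// [toRate] Hz by linearly interpolating between neighbouring samples.
/// No low-pass filter is applied before downsampling.
Int16List resampleLinear(Int16List input, int fromRate, int toRate) {
  if (input.isEmpty || fromRate == toRate) {
    return Int16List.fromList(input);
  }
  final outLength = (input.length * toRate) ~/ fromRate;
  final output = Int16List(outLength);
  final step = fromRate / toRate; // input samples advanced per output sample
  for (var i = 0; i < outLength; i++) {
    final pos = i * step;
    final index = pos.floor();
    final frac = pos - index;
    final a = input[index];
    // At the last input sample there is no right neighbour, so hold its value.
    final b = index + 1 < input.length ? input[index + 1] : a;
    output[i] = (a + (b - a) * frac).round();
  }
  return output;
}
```

For example, a device recording at 44.1 kHz would call `resampleLinear(samples, 44100, 16000)` before passing audio to the rune.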

Now that we have an audio stream, we can feed it to our microspeech rune, which is stored in the assets/ folder.

We can initialize our rune with the following code block.

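```dart
import 'dart:ffi';
import 'dart:typed_data'; // Uint8List, used when running the rune

import 'package:flutter/services.dart' show rootBundle;
import 'package:runevm_fl/runevm_fl.dart';

...

// Loads the rune from the asset bundle into the Rune VM.
// `bytes` is a ByteData? field of the enclosing class.
Future<void> initRune() async {
  try {
    bytes = await rootBundle.load('assets/microspeech.rune');
    bool loaded = await RunevmFl.load(bytes!.buffer.asUint8List()) ?? false;
    if (loaded) {
      String manifest = (await RunevmFl.manifest).toString();
      print("Manifest loaded: $manifest");
    }
  } on Exception {
    print('Failed to init rune');
  }
}
```

Loads the microspeech rune.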

The rune can then be run continuously on the incoming audio.

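```dart
// Classifies one step of buffered audio, skipping steps that are too quiet.
// `runningModel`, `freeze`, `amplitude`, `setAmplitude` and `onStep` are
// members of the enclosing class.
Future<void> run(List<int> stepBuffer) async {
  try {
    runningModel = true;
    setAmplitude(); // updates `amplitude` from the latest audio
    if (amplitude > 80) {
      // Loud enough: classify the buffered audio with the rune.
      String? output = await RunevmFl.runRune(Uint8List.fromList(stepBuffer));
      onStep(output);
      // Hold `freeze` for one second after a detection.
      freeze = true;
      await Future.delayed(const Duration(milliseconds: 1000));
      freeze = false;
    } else {
      // Too quiet: report an empty result and check again after 100 ms.
      onStep(
          '{"type_name":"&str","channel":0,"elements":[""],"dimensions":[1]}');
      await Future.delayed(const Duration(milliseconds: 100));
    }
  } catch (e) {
    print("Error running rune: $e");
  } finally {
    // Always clear the flag, even if the rune throws.
    runningModel = false;
  }
}
```

The run() function handles one step of buffered microphone audio. If the input is loud enough, it runs the rune on that audio and then pauses for one second. If the step is quiet, it reports an empty result and pauses for 100 ms.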
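run() relies on `setAmplitude()` and a threshold of 80, but this post doesn't show how the amplitude is measured or on what scale. The sketch below is a hypothetical stand-in that measures the RMS level of a window of PCM16 samples in decibels relative to one quantization step. On that scale, silence gives 0 and a full-scale signal gives about 90.3. `rmsDecibels` and `shouldRunModel` are our names, not functions from the repository, and any threshold would need tuning on real devices.

```dart
import 'dart:math' as math;
import 'dart:typed_data';

/// Hypothetical loudness measure: RMS level of [samples] in dB relative to
/// one PCM16 quantization step. Levels below one step are reported as 0.
double rmsDecibels(Int16List samples) {
  if (samples.isEmpty) return 0;
  var sumSquares = 0.0;
  for (final s in samples) {
    sumSquares += s * s;
  }
  final rms = math.sqrt(sumSquares / samples.length);
  // Clamp to 0 dB so digital silence does not produce -infinity.
  return rms < 1 ? 0 : 20 * math.log(rms) / math.ln10;
}

/// Gate in the spirit of run(): only classify windows louder than
/// [thresholdDb].
bool shouldRunModel(Int16List samples, double thresholdDb) =>
    rmsDecibels(samples) > thresholdDb;
```

Gating on loudness keeps the model from running on silence, which saves work and avoids spurious moves between spoken commands.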

A few more minor additions are needed for the audio stream and rune to run smoothly together. The full code can be found in lib/runic/audio.dart.
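One addition of this kind is collecting a fixed amount of audio for each call to run(). The sketch below is a hypothetical ring buffer that keeps only the most recent samples, for example 16000 samples, or one second at 16 kHz. We are assuming that one second is the window the microspeech rune expects; we have not confirmed this. `SampleWindow` is our own name. `toBytes()` also assumes the rune takes raw PCM16 bytes in the host's byte order.

```dart
import 'dart:typed_data';

/// Hypothetical fixed-size window over the latest [capacity] PCM16 samples.
class SampleWindow {
  SampleWindow(this.capacity)
      : assert(capacity > 0),
        _buffer = Int16List(capacity);

  final int capacity;
  final Int16List _buffer;
  int _next = 0; // index where the next sample is written
  int _length = 0; // number of valid samples, at most capacity

  bool get isFull => _length == capacity;

  /// Appends [samples], overwriting the oldest ones once the window is full.
  void addAll(Iterable<int> samples) {
    for (final s in samples) {
      _buffer[_next] = s;
      _next = (_next + 1) % capacity;
      if (_length < capacity) _length++;
    }
  }

  /// Returns the buffered samples, oldest first.
  Int16List snapshot() {
    final out = Int16List(_length);
    final start = (_next - _length) % capacity; // Dart's % is non-negative
    for (var i = 0; i < _length; i++) {
      out[i] = _buffer[(start + i) % capacity];
    }
    return out;
  }

  /// The snapshot viewed as bytes (host byte order), e.g. for a rune call.
  Uint8List toBytes() => Uint8List.sublistView(snapshot());
}
```

A ring buffer avoids shifting the whole window on every new chunk. Copying happens only when `snapshot()` is called, at most once per step.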

Game Controls

lib/2048/ contains all the code for the 2048 game, and very little additional code is needed to make it work with the rune. We only need to let the rune control the movement of the tiles. The ← ↑ → ↓ swipe gestures are replaced by the microspeech rune's outputs "left", "up", "right", and "down".

```dart
import 'dart:convert'; // jsonDecode

import 'package:hotg_2048/runic/audio.dart';

...

@override
void initState() {
  super.initState();
  // Called with each rune result; maps the recognized word to a tile move.
  _audio.onStep = (String? output) async {
    if (output != null) {
      lastCommand = jsonDecode(output)["elements"][0];
      /*
      Direction codes passed to handleGesture() below:
      0 = up
      1 = down
      2 = right
      3 = left
      */
      switch (lastCommand) {
        case "up":
          handleGesture(0);
          break;
        case "down":
          handleGesture(1);
          break;
        case "right":
          handleGesture(2);
          break;
        case "left":
          handleGesture(3);
          break;
        default:
          // Ignore empty results and any other words.
          break;
      }
    }
    setState(() {});
  };
  _audio.startRecording();
}
```

This code, located in lib/2048/home_mobile.dart, decodes the rune output and sets the tile movement direction using the handleGesture() function.
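The word-to-direction mapping can also be kept in a lookup table and tested without Flutter. The sketch below is a hypothetical refactor, not code from our repository: `commandToGesture` and `gestureFromRuneOutput` are our names. It mirrors the switch statement's codes (up = 0, down = 1, right = 2, left = 3). It returns null for missing output, for malformed JSON, for the empty silence placeholder, and for any word that is not a direction.

```dart
import 'dart:convert';

/// Direction codes matching the switch statement in home_mobile.dart.
const Map<String, int> commandToGesture = {
  'up': 0,
  'down': 1,
  'right': 2,
  'left': 3,
};

/// Hypothetical helper: extracts the first element of a rune output string
/// and returns its gesture code, or null if it is not a direction word.
int? gestureFromRuneOutput(String? output) {
  if (output == null) return null;
  Object? decoded;
  try {
    decoded = jsonDecode(output);
  } on FormatException {
    return null; // not valid JSON
  }
  if (decoded is! Map<String, dynamic>) return null;
  final elements = decoded['elements'];
  if (elements is! List || elements.isEmpty) return null;
  return commandToGesture[elements.first];
}
```

Inside `onStep`, this would reduce to `final g = gestureFromRuneOutput(output); if (g != null) handleGesture(g);`.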

Assets and Permissions

Flutter needs to know that the microspeech rune is an asset, so add it to the assets list in the project's pubspec.yaml.

```yaml
flutter:
  assets:
    - assets/microspeech.rune
```

Run `flutter pub get` to update packages.

Our final step is to give the application permission to use the microphone.

Android

Add this line to android/app/src/main/AndroidManifest.xml, inside the <manifest> element.

```xml
<uses-permission android:name="android.permission.RECORD_AUDIO"/>
```

iOS

Add the following key and string to ios/Runner/Info.plist.

```xml
<key>NSMicrophoneUsageDescription</key>
<string>Microphone access required</string>
```

Our GitHub repository contains the complete code for the 2048 game.

To run the game on your device from source, clone our runevm_fl repository and run the following commands.

```shell
$ cd runevm_fl/examples/
$ flutter install # Installs HOTG2048 on your connected device.
```

Play the game and share your high scores on our socials!

Join our Community:

Twitter @hotg_ai and @hammer_otg | LinkedIn | Discord | Discourse